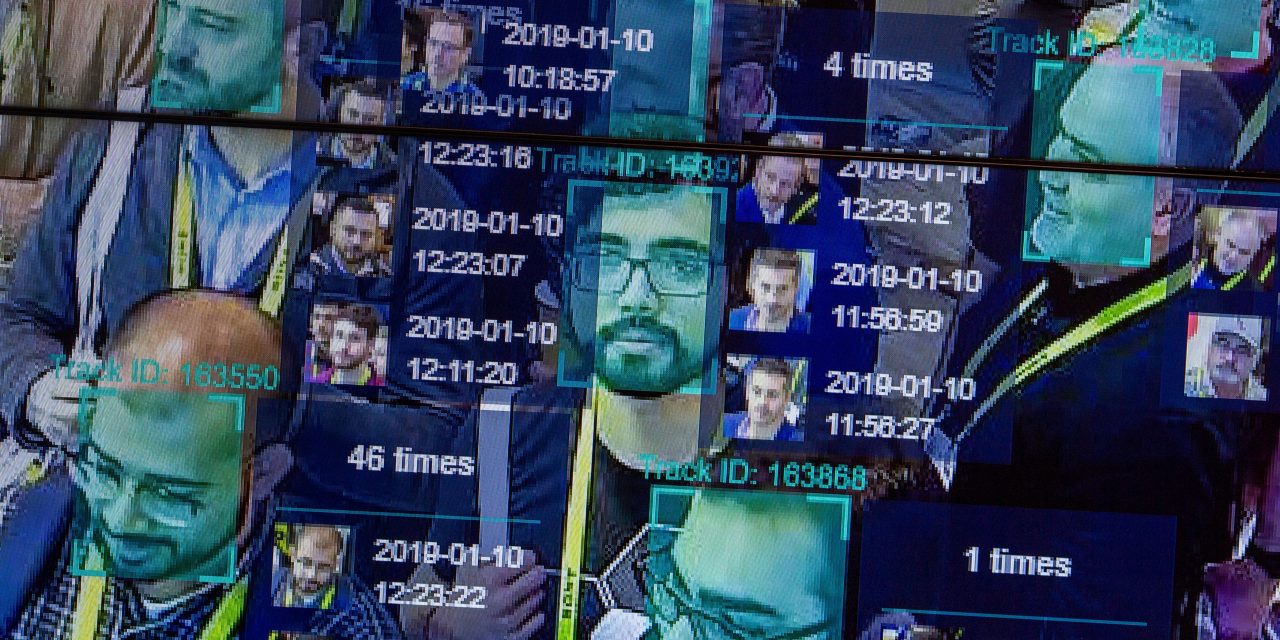

A live demonstration uses facial recognition technology at the Las Vegas Convention Center. (Getty Images)

Private company, ACLU both back S.F. ordinance banning use by authorities

The city of San Francisco drew a line in the sand for the nascent facial recognition industry with a new ordinance that bans the technology from being used by law enforcement or other authorities. The city is one of the first to block the software from being deployed. City supervisor Aaron Peskin, who sponsored the bill, called the technology “psychologically unhealthy” and, if allowed, “the beginnings of a surveillance state.” Similar bans are under consideration in Massachusetts and other states. The ban does not affect the use of the technology by private companies.

Mary Haskett is the co-founder of Blink Identity, one of the leading facial recognition companies testing its software in North American venues and a partner of Live…

What Ripples Will Limits on Facial Recognition Cause?